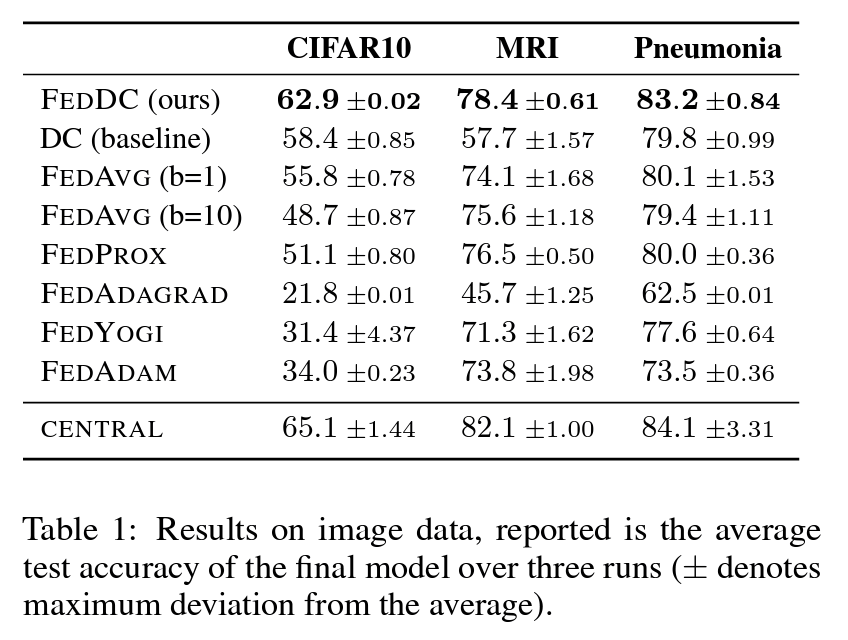

At ICLR 2024 we presented our work on layer-wise linear mode connectivity, led by Linara Adilova, together with Maksym Andriushchenko, Michael Kamp, Asja Fischer and Martin Jaggi.

We know that linear mode connectivity (LMC) does not hold for two independently trained models. But what about *layer-wise* LMC? Here the picture is very different!

We investigate layer-wise averaging and find that, across multiple networks, tasks, and setups, averaging only a single layer does not affect performance! This is in line with research showing that re-initializing individual layers often does not change accuracy.
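To make "averaging only one layer" concrete, here is a minimal NumPy sketch, assuming each model is stored as a list of per-layer weight arrays and that every layer except the interpolated one is taken from model A. The helper names `interpolate_layer` and `layer_barrier` are hypothetical, and measuring the barrier against the straight line between the path's own endpoint losses is our assumption for illustration, not necessarily the exact protocol used in the paper.

```python
import numpy as np


def interpolate_layer(params_a, params_b, layer, alpha):
    """Copy of params_a in which only index `layer` is interpolated:
    (1 - alpha) * params_a[layer] + alpha * params_b[layer]."""
    mixed = [w.copy() for w in params_a]  # all other layers stay as in model A
    mixed[layer] = (1.0 - alpha) * params_a[layer] + alpha * params_b[layer]
    return mixed


def layer_barrier(loss_fn, params_a, params_b, layer, num_points=11):
    """Largest excess of the loss along the single-layer path over the straight
    line joining the path's endpoint losses (0 means no barrier)."""
    alphas = np.linspace(0.0, 1.0, num_points)
    losses = np.array(
        [loss_fn(interpolate_layer(params_a, params_b, layer, a)) for a in alphas]
    )
    chord = (1.0 - alphas) * losses[0] + alphas * losses[-1]
    return float(np.max(losses - chord))


def convex_loss(params):
    """Toy convex loss (sum of squares), a stand-in for a real training loss."""
    return sum(float(np.sum(w ** 2)) for w in params)


# Hypothetical two-layer "models" used only to exercise the helpers.
params_a = [np.ones((2, 2)), np.array([1.0, 0.0])]
params_b = [np.zeros((2, 2)), np.array([-1.0, 0.0])]
averaged = interpolate_layer(params_a, params_b, layer=1, alpha=0.5)
print(averaged[1])  # only layer 1 is averaged: [0. 0.]
print(layer_barrier(convex_loss, params_a, params_b, layer=1))  # 0.0 for a convex loss
```

With a real network, `loss_fn` would evaluate the model built from the given weights on held-out data, and `alpha=0.5` corresponds to plain single-layer averaging.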

Nevertheless, is there a critical number of layers that must be averaged to reach a high-loss point? It turns out that the barrier-prone layers are concentrated in the middle of the model.
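One way to make "averaging a set of layers" precise is sketched below in our own notation (an assumption, not the paper's definitions). For two models $\theta_A$ and $\theta_B$ with layers $l = 1, \dots, L$ and a subset $S$ of layers, only the layers in $S$ are interpolated, and the barrier compares the loss along that path with the straight line between its endpoint losses:

$$
W^{(l)}_S(\alpha) =
\begin{cases}
(1-\alpha)\,W^{(l)}_A + \alpha\,W^{(l)}_B, & l \in S,\\
W^{(l)}_A, & l \notin S,
\end{cases}
\qquad
\theta_S(\alpha) = \big(W^{(1)}_S(\alpha), \dots, W^{(L)}_S(\alpha)\big),
$$

$$
B_S = \max_{\alpha \in [0,1]} \Big[\, \mathcal{L}\big(\theta_S(\alpha)\big) - (1-\alpha)\,\mathcal{L}\big(\theta_S(0)\big) - \alpha\,\mathcal{L}\big(\theta_S(1)\big) \Big].
$$

Taking $S = \{l\}$ gives single-layer averaging, while $S = \{1, \dots, L\}$ gives the usual linear interpolation between the full models, since then $\theta_S(0) = \theta_A$ and $\theta_S(1) = \theta_B$. Asking for a critical number of layers then amounts to growing $S$ and observing when $B_S$ becomes large.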

Can we gain more insight into this phenomenon? Let’s look at a minimal example: a deep linear network. Indeed, a deep linear network trained with a convex loss (such as squared error) is convex with respect to each individual layer: with the other layers fixed, its output is linear in that layer's weights.
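The convexity claim can be checked numerically. Because $y = W_L \cdots W_1 x$ is affine in the interpolation coefficient when only one layer moves, the squared error along a single-layer path is a convex quadratic, so it never exceeds the chord between its endpoints. The sketch below assumes mean squared error, arbitrary widths, and two unrelated random networks (convexity in a single layer does not require training); all function names are hypothetical.

```python
import numpy as np


def forward(weights, x):
    """Deep linear network without nonlinearities: y = W_L ... W_2 W_1 x."""
    h = x
    for w in weights:
        h = w @ h
    return h


def mse(weights, x, y):
    return float(np.mean((forward(weights, x) - y) ** 2))


def path_is_convex(weights_a, weights_b, layer, x, y, num_points=21):
    """Interpolate only `layer` between the two networks and check that the
    loss never rises above the chord between the endpoint losses."""
    alphas = np.linspace(0.0, 1.0, num_points)
    losses = []
    for a in alphas:
        mixed = [w.copy() for w in weights_a]
        mixed[layer] = (1.0 - a) * weights_a[layer] + a * weights_b[layer]
        losses.append(mse(mixed, x, y))
    losses = np.array(losses)
    chord = (1.0 - alphas) * losses[0] + alphas * losses[-1]
    tol = 1e-9 * max(1.0, float(losses.max()))  # allow for floating-point rounding
    return bool(np.all(losses <= chord + tol))


rng = np.random.default_rng(0)
dims = [4, 8, 8, 3]  # arbitrary widths: input, two hidden layers, output
x = rng.normal(size=(dims[0], 64))
y = rng.normal(size=(dims[-1], 64))
weights_a = [rng.normal(size=(dims[i + 1], dims[i])) for i in range(len(dims) - 1)]
weights_b = [rng.normal(size=(dims[i + 1], dims[i])) for i in range(len(dims) - 1)]

results = [path_is_convex(weights_a, weights_b, layer, x, y) for layer in range(len(weights_a))]
print(results)  # [True, True, True]
```

Interpolating two or more layers at once makes the output a higher-degree polynomial in the interpolation coefficient, so this guarantee no longer applies.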

Can robustness explain this property? That is, do neural networks have a particular robustness to weight changes that allows them to compensate for modifications of a single layer? For some layers the answer is yes: it is indeed much harder to reach a high loss for a more robust model.

This also means that we cannot treat random directions as uniformly representative of the loss surface: our experiment shows that particular subspaces are more stable than others. In particular, single-layer subspaces differ in their tolerance to noise!
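A minimal sketch of comparing noise tolerance across subspaces, assuming plain Gaussian directions rescaled to a fixed total L2 norm (no per-filter normalization) and a model stored as a list of per-layer arrays. The helpers `random_direction` and `mean_loss_increase` are hypothetical and only illustrate the idea of restricting a random direction to one layer's subspace; they are not the experimental protocol from the paper.

```python
import numpy as np


def random_direction(weights, rng, layer=None, norm=1.0):
    """Gaussian random direction scaled to a fixed total L2 norm.
    With `layer` set, the direction is zero outside that layer,
    i.e. it lies in the single-layer subspace."""
    direction = [
        rng.normal(size=w.shape) if layer is None or i == layer else np.zeros_like(w)
        for i, w in enumerate(weights)
    ]
    total = np.sqrt(sum(float(np.sum(d ** 2)) for d in direction))
    return [d * (norm / total) for d in direction]


def mean_loss_increase(loss_fn, weights, rng, layer=None, norm=1.0, trials=10):
    """Average loss increase after adding random directions of a fixed norm,
    either in the full parameter space (layer=None) or in one layer's subspace."""
    base = loss_fn(weights)
    increases = []
    for _ in range(trials):
        d = random_direction(weights, rng, layer=layer, norm=norm)
        increases.append(loss_fn([w + dw for w, dw in zip(weights, d)]) - base)
    return float(np.mean(increases))
```

For a trained model, calling `mean_loss_increase` once with `layer=None` and once per layer index, at the same `norm`, shows whether some single-layer subspaces tolerate a perturbation of a given size better than a random direction in the full space.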